What would shock Gauss most if he were to come back to life would be to learn that the studies he did to determine the eccentricity of the asteroid Juno (0.254236) are used today to multiply natural numbers.

Kolmogorov experienced this surprise firsthand. In the 1950s, when he was about 50 years old, influenced by the work of Wiener and Claude Shannon, he became interested in computer algorithms, and was among the first to become interested in the complexity of algorithms.

Kolmogorov set himself the problem of quantifying the work involved in carrying out an algorithm: how much more expensive is it to multiply two numbers of \(n\) digits than to add them together? The answer is to count how many elementary operations we have to perform. We could give a precise definition of what an elementary operation is, but I think that in what follows the intuitive ideas we have will be enough.

To add the two numbers we have to read each digit (because modifying a single digit would change the result), there are \(2n\) operations and we have to write the result, another \(n\) operations. The work with each pair of digits is more or less constant. Thus we see that the number \(S(n)\) of operations necessary to add two numbers of \(n\) digits verifies the constraints \(2n\le S(n)\le C\;n\) where \(C\) is a certain constant. We will say that the number of operations necessary for the sum is \(S(n)=O(n)\). That is, of the order of \(n\) operations.

What happens to the product? We denote by \(M(n)\) the order of elementary operations necessary to multiply numbers of \(n\) digits with the best possible algorithm. We also have that \(M(n)\ge 2n\) because the numbers, at least, have to be read. In the algorithm we learn at school, we have to do an elementary operation with each pair of digits, one from the multiplicand and the other from the multiplier. There are \(n^2\) pairs. The remaining work is of lower order. So we arrive at that the usual algorithm proves that \(M(n)\le c n^2\).

The usual algorithm is very ancient. So ancient that we don’t really know when it originated. Such an algorithm existed in Sumerian or Egyptian times, about 4000 years ago, and possibly goes back further in time. Kolmogorov thought that if a better algorithm existed, it would have been discovered by now. In his seminars he hypothesised that \(M(n)=O(n^2)\). That is, that the best method for multiplying numbers of length \(n\) would require of the order of \(n^2\) elementary operations.

Anatoly Karatsuba, a pupil of Kolmogorov, 23 years old at the time, tells in [2] what happened:

In the autumn of 1960 a seminar on problems in cybernetics was held at the Faculty of Mechanics and Mathematics of Moscow University under the direction of Kolmogorov. There Kolmogorov stated the \(n^2\) conjecture and proposed some problems concerning the complexity of the solution of linear systems of equations and some similar calculations. I began to think actively about the \(n^2\) conjecture, and in less than a week I found that the algorithm with which I was planning to derive a lower bound for \(M(n)\) gave a bound of the form

$$M(n)=O(n^{\log_23}),\qquad \log_23=1.5849\dots$$

At the end of the next seminar meeting I told Kolmogorov about the new algorithm and the refutation of the \(n^2\) conjecture. Kolmogorov was very agitated because this contradicted his very plausible conjecture. At the next meeting of the seminar, Kolmogorov himself told the participants about my method and at this point the seminar ended.

That’s 4000 years defeated in a week by a young, open mind, faced with a well-proposed problem. The analysis is interesting. Kolmogorov set himself the problem of determining the order of \(M(n)\). In the previous 4000 years a good method had been sought and found. Gauss, for example, did not consider improving it, it was a good method and one rarely had to multiply numbers of many digits. But Kolmogorov posed the problem of seeing that there is no better method, proving that \(M(n)\ge cn^2\). That is, he proposed to prove that no matter what method we use we will need elementary operations of the order of \(n^2\).

Then came Karatsuba with his open mind and tried to prove Kolmogorov’s conjecture. How to do it? His strategy was to assume \(M(n)\) and try to find a relation between \(M(n)\) and \(M(n/2)\). Then there is another question. If I know how to multiply numbers of \(n/2\) digits, how does this help me to multiply numbers of \(2n\) digits? As we will see this leads directly to the new algorithm.

Good questions and open-mindedness are two of the ingredients of mathematics.

Karatsuba’s algorithm

Karatsuba’s algorithm is an example of the general divide-and-conquer strategy. The idea is simple: let \(A\) and \(B\) be the numbers to multiply with \(2n\) digits; we separate them into groups of \(n\) digits

$$A=A_1 10^n+A_0,\quad B=B_110^n+B_0.$$

then

$$AB=(A_1 10^n+A_0)(B_110^n+B_0)=A_1B_1 10^{2n}+(A_1B_0+A_0B_1)10^n+A_0 B_0.$$

Let us note that

$$A_1B_0+A_0B_1=(A_1-A_0)(B_0-B_1)+A_1B_1+A_0B_0.$$

So we obtain

$$AB=(10^{2n}+10^n)A_1B_1+10^n(A_1-A_0)(B_0-B_1)+(10^n+1)A_0B_0$$

We have thus reduced the product of two numbers of \(2n\) digits to three products of numbers of \(n\) digits \(A_1B_1\), \(A_0B_0\) and \((A_1-A_0)(B_0-B_1)\) plus a few translations and additions. Then we have proved that

$$M(2n)\le 3M(n)+8S(n)\le 3M(n)+a\,n,$$

for a certain constant \(a\). By induction we prove that for a power of two \(n=2^m\) one has

$$M(2^m)\le M(1) 3^m +a(2^m+2^{m-1}3+2^{m-2}3^2+\cdots +2\cdot 3^{m-1})$$

and being \(2^\alpha=3\), that is, \(\alpha=\log_23\), we get

$$M(2^m)\le 3^m(M(1)+2a)=C\, 2^{\alpha m}=C(2^m)^\alpha.$$

Now for any \(n\), there exists \(m\)such that \(2^{m-1}\le n<2^m\) and any number of \(n\) digits can be considered a number of de \(2^m\) digits, then

$$M(n)\le M(2^m)\le C(2^{m})^\alpha=C2^\alpha (2^{m-1})^\alpha\le 3C n^\alpha.$$

This is a version of Karatsuba’s method, simplifying for exposition the method contained in [2].

New advances, the Schönhage-Strassen algorithm

After Karatsuba’s surprise, the problem of the true order of \(M(n)\) was posed. Toom and Cook (1966) extended Karatsuba’s ideas and succeeded in proving that \(M(n)=O(n(\log n) e^{c\sqrt{\log n}})\). We will focus in the following on explaining the Schönhage-Strassen (1971) method, which is the one used in practice today when you want to multiply really large numbers, for example with hundreds of thousands or even billions of digits.

All known methods are based on one idea:

Multiplying numbers is equivalent to multiplying polynomials.

The factors are written in a base, actually the multiplication methods use the base \(2\), but we illustrate it with our more familiar decimal base. The notation 325 for example means

$$3\times 10^2+2\times 10+5=p(10), \quad\text{where}\quad

p(x)=3x^2+2x+5.$$

And multiplying two numbers is almost equivalent to multiplying two polynomials. For example

$$\begin{array}{lllll}

& & 3 & 2 & 5\\

& & 2 & 7 & 3\\ \hline

& & 9 & 7 & 5\\

2 & 2 & 7 & 5 & \\

6 & 5 & 0 & & \\ \hline

8 & 8 & 7 & 2 & 5

\end{array}

\qquad\qquad

\begin{array}{rrrrr}

& & 3x^2 & +2x & +5\\

& & 2x^2 & +7x & +3\\ \hline

& & 9x^2 & +6x & +15\\

& 21x^3 & +14x^2 & +35x & \\

6x^4 & +4x^3 & +10x^2 & & \\ \hline

6x^4 & +25x^3 & +33x^2 & +41 x & 15

\end{array}

$$

The product of polynomials is more orderly, there are no transfers from one column to another. Moreover, it is practically equivalent because the polynomial product evaluated at \(x=10\) gives us the value of the product of the numbers. In fact, knowing the coefficients of the polynomial product, the product can be obtained, as can be seen in the following diagram.

$$\begin{array}{lllll}

& & & 1 & 5\\

& & 4 & 1 & \\

& 3 & 3 & & \\

2 & 5 & & & \\

6 & & & & \\ \hline

8 & 8 & 7 & 2 & 5

\end{array}

$$

The coefficients of the polynomial product will be less than \(nb^2\) if we are working in base \(b\), and the number of elementary operations to obtain the product from the polynomial will be approximately \(n\log(nb^2)\).

In the Schönhage-Strassen method, if we want to multiply numbers of \(n\) binary digits, we look for numbers \(k\) and \(\ell\) such that \(2n\le 2^k \ell<4n\) and then we work with numbers of \(2^k\) digits in base \(b=2^\ell\). Thus, obtained the product of the polynomials, we need \(2^k \log(2^k 2^{2\ell})\approx 2^k(k+2\ell)\). Taking \(k\) and \(\ell\) of the same order we get the product with \(O(n)\) elementary operations, assuming the product of polynomials is known.

Convolution and Fourier Transform

Let’s remember that to multiply numbers of \(n\) digits we choose \(2n\le 2^k \ell<4n\) and we work in base \(b=2^\ell\), that is to say grouping the digits of the factors in groups of \(\ell\) binary digits.

The factors will have approximately \(n/\ell<2^{k-1}\) digits in base \(2^\ell\). Defining \(K=2^k\) we can write the factors in the base \(b\) in the form

$$(U_{K-1}, U_{K-2},\dots, U_0)_b, \quad (V_{K-1}, V_{K-2},\dots, V_0)_b.$$

Since they were numbers of less than \(K/2\) digits, we will have \(U_j=V_j\) for \(j\ge K/2\). We have done that intentionally because of the following.

We must calculate the coefficients of the polynomial product

$$\begin{array}{l}P(x)=U(x)V(x)=&(U_{K-1}x^{K-1}+U_{K-2}x^{K-2}+\cdots +U_1x+U_0)

\\&\times (V_{K-1}x^{K-1}+V_{K-2}x^{K-2}+\cdots +V_1x+V_0)\end{array}$$

which are the numbers

$$P_r=U_rV_0+U_{r-1}V_1+\cdots + U_0V_r$$

since we have added the \(U_j=V_j=0\) for the large \(j\) we have that this is equal to the convolution in the group \(\mathbf{Z}/(K\mathbf{Z})\) of the numbers modulo \(K\), that is to say that

$$P_r=\sum_{a+b=r} U_aV_b.$$

The convolution operation is closely related to the Fourier transform. In the case of the finite group the transform of \((U_j)_{j=0}^K\) is the sequence

$$

\widehat{U}_a=\sum_{j=0}^{K-1} U_j \omega^{aj},\qquad \omega=e^{-2\pi i/K}.\qquad (1)

$$

That is

$$\widehat{U}_a=U(\omega^a),\quad \widehat{V}_a=V(\omega^a)$$

are the values of the polynomials \(U(x)\) and \(V(x)\) at the unit roots.

From this follows the fundamental property of the transform and the convolution. Since the polynomial \(P(x)=U(x)V(x)\), we will have that the transform of the convolution is the product of the transforms

$$P(\omega^a)=U(\omega^a)V(\omega^a),\quad\text{that is}\quad\widehat{P}_a=\widehat{U}_a\widehat{V}_a.$$

So, not the \(P_a\) coefficients, but their Fourier transforms are easily obtained by just \(K\) products.

To the rescue then comes the fact that the inverse of the transform is essentially another Fourier transform

$$P_a=\frac{1}{K}\sum_{j=0}^{K-1}\widehat{P}_a\omega^{-aj}.$$

We then have the following scheme for multiplying numbers. Find the Fourier transform of the factors \(\widehat{U}_a\) and \(\widehat{V}_a\). Form \(K\) products \(\widehat{P}_a=\widehat{U}_a\widehat{V}_a\) and perform a new (inverse) Fourier transform to obtain the product coefficients.

The problem is that as we have defined the transform in (1), we need \(K^2\) products, and this is too large. Here we are helped by a technique that was already known to Gauss but was rediscovered and popularised by Cooley and Tukey in 1965.

Fast Fourier Transform

The FFT (Fast Fourier Transform) technique is complicated. Its practical utility extends to many fields of technology. Any procedure in which the Fourier transform is applied takes advantage of it. Gauss [1] studied it in connection with his astronomical calculations. Gauss wanted to determine the orbit of an asteroid from a series of measurements. He expressed the orbit as a trigonometric series and wanted to obtain the coefficients from the measurements. This is actually a Fourier transform.

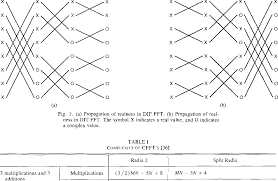

FFT is based on the Danielson-Lanczos identity. Assuming \(K\) even, we have

$$\widehat{U}_a=\sum_{j=0}^{K-1} U_j \omega^{aj}=\sum_{j=0}^{K/2-1}U_{2j} (\omega^2)^{aj}

+\omega^a\sum_{j=0}^{K/2-1}U_{2j+1} (\omega^2)^{aj}.$$

This equality expresses a Fourier transform, requiring \(K^2\) products, by two transforms of half length requiring \(2(K/2)^2=K^2/2\) multiplications. If \(K\) is a power of two, we can iterate the procedure and in the end we get a method of calculating the transform that requires only \(O(K\log K)\) operations.

I insist that there are entire books dedicated to the FFT technique and that therefore our explanation, although correct, lacks some details.

Algebra and the game of rings

We come to the last ingredient of the Schönhage-Strassen method. In the way we have described it, it has been successfully implemented. In it the ring of complex numbers has been used. The unit root \(\omega\) is a complex number, the calculations have to be done in \(\mathbf{C}\) and the transforms \(\widehat{U}_j\) are complex numbers. This makes it necessary to work with approximate numbers and requires a rigorous study of the errors, apart from the fact that there is a bit of luck in that the errors do not propagate too much in the calculations to be carried out.

But it is possible to replace the field \(\mathbf{C}\) by a ring in which we have a primitive root \(K\)-th of unity and in which \(K\) is invertible. With this we can define the transform by substituting the complex number \(\omega\) for the root of unity. The above formulae are still valid.

For example one can use the ring \(\mathbf{Z}((2^m+1)\mathbf{Z})\) taking \(m>k+2\ell\). Thus the numbers \(P_a\mod (2^m+1)\) allow us to recover \(P_a\) since \(P_a<2^m\).

We take \(m\) to be a multiply of \(K\), so \(\omega=2^{2m/K}\) is a primitive root of unit with order \(K\). The choice given \(n\) of \(k\), \(\ell\) \(K\), and \(m\) is one of the practical problems of the Schönhage-Strassen method. But good choices ensure that the method performs with \(O(n\log n\log\log n)\) elementary operations.

Subsequent progress on the value of M(n)

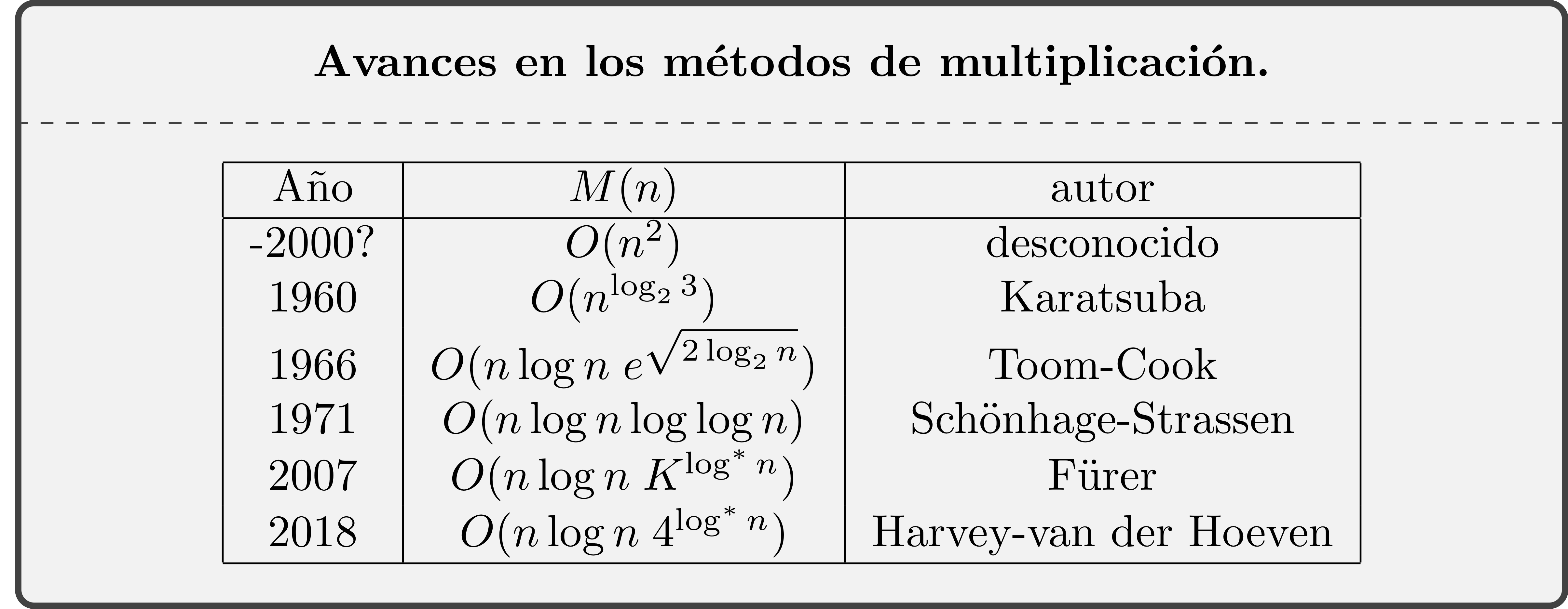

Kolmogorov’s conjecture was not valid, and Karatsuba’s result proves it. But then what is the true order of \(M(n)\)? We do not know, since Karatsuba’s result better algorithms have been constructed that lower the upper bound of \(M(n)\). Subsequent advances to the Schönhage-Strassen method have had no practical impact. First, they are only slight modifications of the Schönhage-Strassen method from which they mainly differ in the choice of the ring or rings on which they work.

Secondly, the advances concern the last factor in \(n\log n\log\log n\). It is replaced by another factor that grows more slowly, but since \(\log\log n\) is so slow growing, the advantage would only apply to numbers that are not really within the reach of today’s computers.

To explain how far these improvements go, we must define a very slow, extremely slow function \(\log^*x\), defined for \(x\ge1\). Given \(x>1\), let’s say \(x_0=x\), \(x_1=\log x\), \(x_2=\log x_1\), \(\dots\), \(x_n=\log x_{n-1}\), stopping at the point where we find the first \(x_n\le 1\). Then we define \(\log^*x=n\). The function \(\log^* x\) takes the value \(1\) at the interval \((1,e]\), \(\log^*x=2\) for \(x\in(e,e^e]\), \(\log^*x=3\) for \(x\in(e^e, e^{e^e}]\), \(\dots\) takes the value \(4\) when \(x\) has less than 1656520 digits. The function \(\log^*x\) tends to infinity but in the laziest way imaginable (if we were not mathematicians, who have an unimaginable imagination).

We are now in a position to explain the evolution of our knowledge about \(M(n)\).

We wrote this post on the occasion of the appearance of Harvey and van der Hoeven’s paper [11] on arXiv in February this year. Previous work had achieved multiplication in \(O(n\log n\; 4^{\log^*n})\) elementary operations, but assumed the existence of certain primes that were necessary to construct the rings they were working on. This made the result conditional, because although the right primes were supposed to exist, this was not proven. In this work, suitable rings are obtained without the need to hypothesise about the existence of certain primes.

There is one question I should not fail to ask before

Problem 1. Is it actually \(M(n)\ge C n\log n\)?

For addition we know the exact order since it is clear that \(S(n)>Cn\), because at least we have to read the data, and the usual method of addition gives an upper bound of the same order. In our question it is necessary to consider any possible method for multiplying and see that they all need to do elementary operations of order \(n\log n\). These kinds of problems are currently very difficult.

Learn more

The historical aspect of how Gauss was the first to define the FFT technique is well-developed in

[1] Heideman, M.T., Johnson, D.H., y Burrus, C. S., Gauss and the History of the Fast Fourier Transform, IEEE ASSP Magazine 1 (4) (1984) 14-21.

PDF version.

I got the Karatsuba story from

[2] Karatsuba, A. A., The Complexity of computations, Proc. Steklov Inst. Math., 211 (1995), 169-183.

PDF version.

This story has a second, somewhat unfortunate, part. Karatsuba tells it, but we can see some details and/or comments in:

[3] Question on the Kolmogorov-Karatsuba relation in Stackexchange on the theory of computation.

The Schönhage-Strassen method is discussed in various sources. We particularly recommend

[4] Knuth, D. E. The art of computer programming. Vol. 2. Seminumerical algorithms, Third edition. Addison-Wesley, Reading, MA, 1998.

or

[5] Crandall, R., Pomerance, C., Prime numbers. A computational perspective, Second edition. Springer, New York, 2005.

The original paper in German:

[6] Schönhage, A.; Strassen, V., Schnelle Multiplikation grosser Zahlen, Computing (Arch. Elektron. Rechnen) 7 (1971), 281-292.

For those who want more detailed information, it may be helpful

[7] Gaudry, P., Kruppa, A., Zimmermann, P., A GMP-based implementation of Schönhage-Strassen’s large integer multiplication algorithm, ISSAC 2007, 167–174, ACM, New York, 2007. PDF version.

Other works I have consulted for this post:

[8] Pollard, J. M., The Fast Fourier Transform in a Finite Field, Math. of Comp. 25, (1971) 365-374. PDF version.

[9] Toom, A. L., The complexity of a scheme of functional elements simulating the multiplication of integers, Soviet Math. Dokl., 3 (1963) 714–716.

On his website you can find the PDF of the article with some other interesting material.

[10] Fürer, M., Faster integer multiplication, STOC’07—Proceedings of the 39th Annual ACM Symposium on Theory of Computing, 57–66, ACM, New York, 2007.

PDF version.

[11] Harvey, D. y J. van der Hoeven, Faster Integer multiplication using short lattice vectors. febrero 2018, arXiv:1802.07932.

Leave a Reply