So far all methods of multiplying numbers of n digits take a time of the order of n(logn)f(n) with f(n) being a function tending to infinity with n slower than logn. A conjecture of Schönhage and Strassen states that any multiplication method will need at least n(logn). An algorithm for multiplying precisely in time of the order of n(logn) has just been published. We already know how to multiply! This month we have learned how to multiply efficiently.

The authors are David Harvey of the University of New South Wales in Sydney and Joris Van Der Hoeven of the Laboratoire d’informatique de l’École polytechnique. We comment today on their feat.

It is surprising that the new method is so enormously complicated. There remains the daunting task of simplifying it.

This is a giant step in the solution of one of the basic questions of mathematics that has been pending for millennia. The usual algorithm, which we learned at school, takes a time of the order of n2. But computers have long since used the primitive method only for multiplying small numbers. To multiply large numbers they use free implementations (GNU Multiple Precision Arithmetic Library) of the second Schönhage-Strassen algorithm dating from 1971. This algorithm multiplies in a time of the order of n(logn)(loglogn).

Schönhage and Strassen in 1971 already conjectured that the time actually needed must be nlogn, (the time needed to perform a fast Fourier transform operation on the data of the problem). If this conjecture is true, we have the definitive algorithm from the point of view of theoretical efficiency.

We must explain at this point the difficulty of proving that we really need a time of the order nlogn. It is one thing to imagine an algorithm and to study concretely how long this algorithm takes (this is what has just been done). It is quite another to imagine a task in the abstract (in this case multiplying two numbers) and to prove that any method that performs it will take some time (in this case nlogn). The difficulty is that we don’t know what the algorithm is.

General idea of algorithms for multiplication

Our problem is to multiply integers. We are interested in algorithms that do this as quickly as possible. This depends on the size of the numbers. So we make the problem precise and what we want is to multiply numbers of n digits and what we are really interested in is how complicated the task becomes as n increases. We measure this complication by giving an idea of how the time needed to perform the task grows.

The algorithm we learned at school is very suitable for numbers of a few digits. But there are better methods. All these methods have some common characteristics that we are going to analyse:

Recurrence

All these methods reduce the product of the two n digits to a certain number of products of smaller numbers.

For example, the usual method reduces the product to n2 products of one-digit numbers. Then the results must be properly summed, but this is a relatively minor task. Then if M(n) denotes the time needed to multiply two numbers of n digits we get M(n)<n2M(1).

We have already seen in another post: Multiplication is easier than you think, how Karatsuba’s algorithm reduces the product of two numbers of n digits to three products of numbers of n/2 digits and a remainder due to the sums with which M(n)<3M(n/2)+an

The other known methods make use of several ideas.

Polynomial product

The first idea, which we explained then, is based on the fact that each number written in the decimal system is the value of a polynomial p(x) in x=10. For example 237=2⋅102+3⋅10+7=p(10) where p(x)=2x2+3x+7. In this way we can reduce multiplying two numbers to the problem of multiplying two polynomials. (See the previous post Multiplication is easier than you think, for a more detailed explanation).

Fast Fourier Transform

To obtain the product C(x)=A(x)B(x) of two polynomials of degree n, we can follow the strategy of calculating at n+1 points mk=A(xk)B(xk) and knowing this find the polynomial C(x) such that it satisfies the equations C(xk)=mk. This seems more complicated than doing the product directly. But if we choose xk the n+1 n+1-th roots of unity, calculating A(e2πik/(n+1)) is like calculating the Fourier transform of the coefficients of A. It turns out that there is a procedure, discovered by Gauss, which allows these operations to be carried out in nlogn time. It is the fast Fourier transform (FFT).

All known really fast methods depend on the FFT. From this arises the conjecture that the most we can aspire to is a method of the order of nlogn. It is also supported by the fact that this lower bound has been proven in the case of on-line multiplication, where the k-th bit of the product must be written before the (k+1)-th bit of the factors is read.

The game of rings

We see that we have moved from the problem of multiplying integers (elements of the ring Z of integers), to the problem of multiplying polynomials (elements of the ring Z[x]). Then by using the roots of unity, we have transformed the product to a product in the ring of complex numbers C.

But algebra is full of interesting rings. And the history of multiplication methods has also been a history of starring rings.

The latest ideas are to use rings where there are roots of unity simpler than the ring of complex numbers.

Earlier multiplication methods

The first Schönhage-Strassen method

Essentially the one described above. It converts the product into a product of polynomials, with coefficients of size logn. And use the unit roots of C, to perform the FFT. This leads to the recurrence M(n)≪nM(logn)+nlogn.

This recurrence leads to a time of the type M(n)≈(nlogn)f(n) where f(n) is a complicated function of n, which tends to infinity with n, but slower than the logarithm.

Second Schönhage-Strassen method

This is the one currently used in computers. It replaces C by the ring Rh=Z/(2h+1)Z of the remainders modulo 2h+1. This ring has roots of unity suitable for performing the FFT. To multiply numbers of n digits we reduce the problem to multiplying polynomials Rh[x] whose degree is of order √n and take h also of order √n.

In this case we arrive at the bound M(n)≈nlognloglogn.

There are other later methods that improve the function f(n) into M(n)≈(nlogn)f(n), using other rings that combine the best features of the two previous methods.

The new method

Polynomials in several variables

The new method introduces two ideas. The first is to use a ring of polynomials in several variables of the type R[x1,…,xd]/(xt11−1,…xtdd−1),R=C[y]/(yr+1),

But there remains the problem of how we convert the integer multiplication problem to use these rings of many variables. What we do is to find primes s1, …, sd each of size (n/logn)1/d and such that the product s1⋯sd≈(n/logn). In the usual way we reduce the problem to multiplying polynomials of degree s1⋯sd and coefficients of size logn. Using the Chinese remainder theorem, thanks to the sj being prime, we see that Z[x]/(xs1…sd−1)

Gaussian Resampling

Finally there is still the problem that the FFT must use powers of 2 and the primes sj are not. I think this is the most surprising idea of the method.

The coefficients of our polynomials, being of several variables depend on d numbers ∑nic(n1,n2,…,nd)xn1xn2…xnd,

To see in a simpler way what they do, let’s think of the simplest case of d=2. In that case we can think of our data c(n1,n2), as values of a function at the points of a lattice contained in a rectangle of size s1×s2. It is as if we had the values of a function taken on a lattice of s1×s2 equally spaced points.

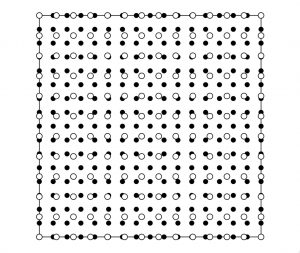

In their work they give the example of s1×s2=13×11. In the figure our c(n1,n2) correspond to the data in the white circles. They superimpose a lattice of size 16×16 (the black circles in the figure) and associate with each of these points a value that is a linear combination of the c(n1,n2) values in the nearby white points. They take Gaussian weights so that each new coefficient depends essentially only on the very close ones.

It is peculiar that they have to make use of the prime number theorem to choose the primes sj, each one close to tj a power of 2. To do this they must use a theorem of J. B. Rosser and L. Schoenfeld from 1962. Rosser and Schoenfeld used the then known results that the first zeros of the zeta function lie on the critical line.

So the presented algorithm needs to use calculations of the first zeros of the zeta function to justify that it processes in the required time.

Naturally the method is not practical as it stands now. The advantage over the second Schönhage-Strassen method – nlogn versus nlognloglogn – is too small. As described, the new algorithm is difficult to implement in practice. There is a lot of simplifying to do until the method becomes practical, if ever.

Learn more

The article is hard to read, it has a lot of technical difficulty. But for those who want to have a look at it, it is freely available on the net:

David Harvey, Joris Van Der Hoeven, Integer multiplication in time O(nlogn).

There is still little impact on the net, but Richard Lipton already has an entry in his well-known blog “Gödel’s Lost Letter and P=NP”: Integer multiplication in NlogN Time.

Leave a Reply